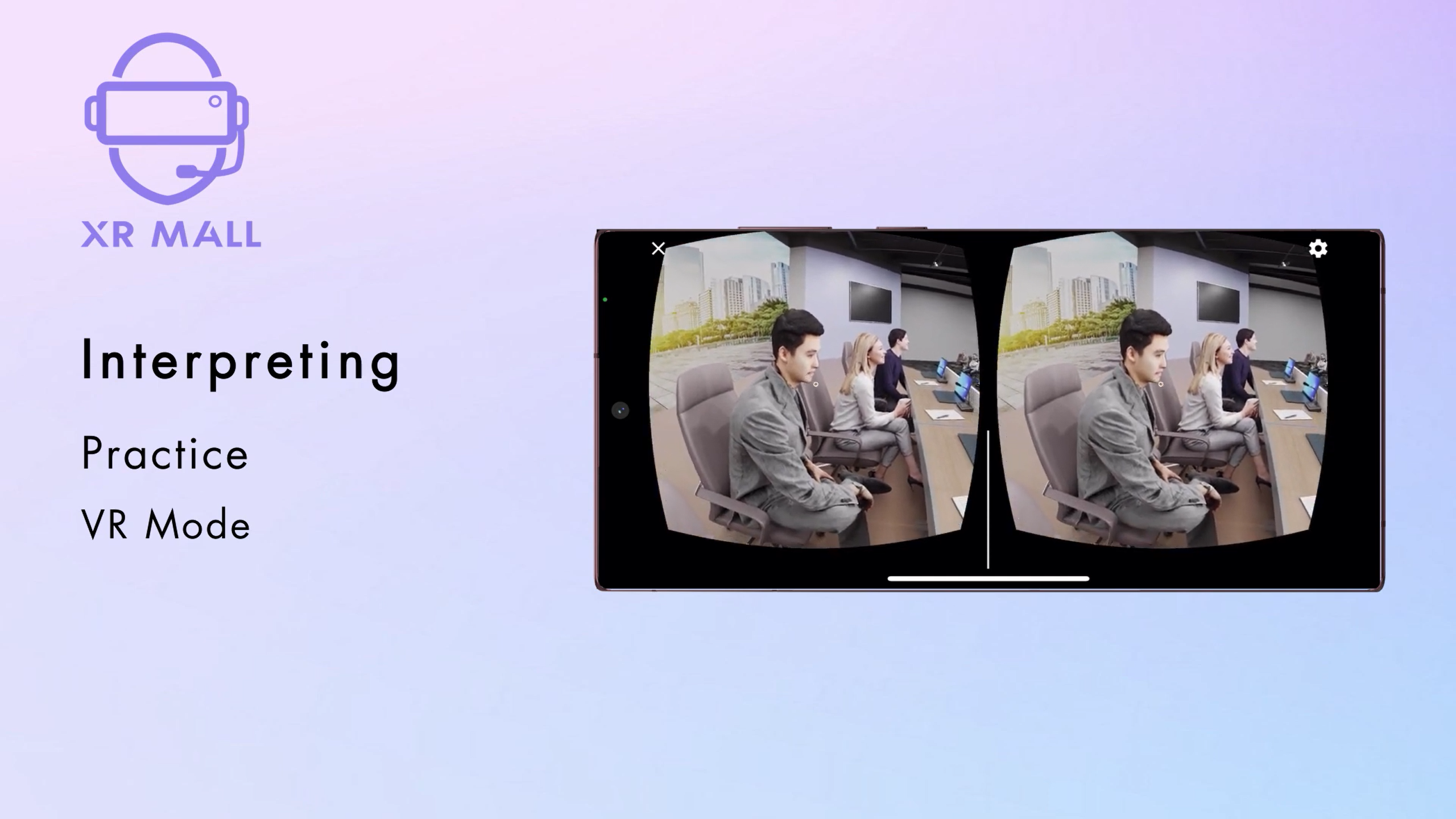

Interpreting and public speaking play an important role in facilitating and enhancing communication. Authentic contexts, or real working conditions, are crucial to interpreting and public speaking training. Yet learners often struggle to get enough authentic, guided practice, because training resources are limited and authentic contexts are difficult to create in traditional classrooms. Emerging technologies such as virtual reality (VR), augmented reality (AR) and mobile technologies have created new opportunities for self-study and scenario-based learning, which could benefit interpreting and public speaking training. Since there is currently a lack of VR/AR mobile apps for interpreting and public speaking practice on the market, it is worth exploring how these technologies can be integrated to offer an alternative way of developing talent in these areas.